Recently we had an issue when adding a new table to a Cassandra cluster (version 3.11.2). We added a new table create statement to our Java application, deployed our application to our Cassandra cluster in each of our environments, the table was created, we could read and write data, everything was fine.

However, when we deployed to our production Cassandra cluster, the new table was created, but we were unable to query the table from any node in the cluster. When our Java application tried to do a select from the table it would get the error:

Cassandra timeout during read query at consistency LOCAL_ONE (1 responses were required but only 0 replica responded)

We tried connecting to each node in the cluster and using CQLSH, but we still had the same issue. On every node Cassandra knew about the table and we could see the schema definition for the table, but when we tried to query it we would get the following error:

ReadTimeout: Error from server: code=1200 [Coordinator node timed out waiting for replica nodes' response]

message="Operation timed out - received only 0 responses." info={'received_response': 0, 'required_response': 1, 'consistency': 'ONE'}

We decided to try describe cluster to see if we could get any useful info:

nodetool describecluster

There was our problem! We had a schema disagreement! Three nodes of our six node cluster were on a different schema:

Cluster Information:

Name: OurCassandraCluster

Snitch: org.apache.cassandra.locator.SimpleSnitch

DynamicEndPointSnitch: enabled

Partitioner: org.apache.cassandra.dht.Murmur3Partitioner

Schema versions:

819a3ce1-a42c-3ba9-bd39-7c015749f11a: [10.111.22.103, 10.111.22.105, 10.111.22.104]

134b246c-8d42-31a7-afd1-71e8a2d3d8a3: [10.111.22.102, 10.111.22.101, 10.111.22.106]

We checked DataStax, which had the article Handling Schema Disagreements. However, their official documentation was sparse and was assuming a node was unreachable.

In our case all the nodes were reachable, the cluster was functioning fine, all previously added tables were receiving traffic, it was only the new table we just added that was having a problem.

We found a StackOverlow post suggesting a fix for the schema disagreement issue was to cycle the nodes, one at a time. We tried that and it did work. The following are the steps that worked for us.

Steps to Fix Schema Disagreement

If there are more nodes in one schema than in the other, you can start by trying to restart a Cassandra node in the smaller list and see if it joins the other schema list.

In our case we had exactly three nodes on each schema. In this case it is more likely the nodes in the first schema are the ones that Cassandra will pick during a schema negotiation, so try the following instructions on one of the nodes in the second schema list.

Connect to a node

Connect to one of the nodes in the second schema list. For this example lets pick node “10.111.22.102”;

Restart Cassandra

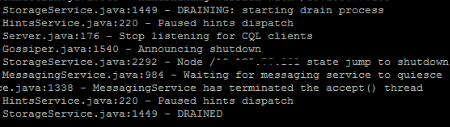

First, drain the node. This will flush all in memory sstables to disk and stop receiving traffic from other nodes.

nodetool drain

Now, check the status to see if the drain operation has finished.

systemctl status cassandra

You should see in the output that the drain operation was completed successfully.

Stop Cassandra

systemctl stop cassandra

Start Cassandra

systemctl start cassandra

Verify Cassandra is up

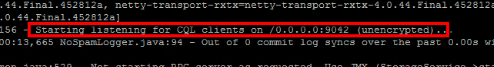

Lets check the journal to ensure Cassandra has restarted successfully

journalctl -f -u cassandra

When you see the following message, it means Cassandra has finished restarting and is ready for clients to connect.

Verify Schema Issue Fixed For Node

Now that Cassandra is back up, run the describe cluster command again to see if the node has switched to the other schema:

nodetool describecluster

If all has gone well, you should see that node “10.111.22.102” has moved to the other schema list (Note: The node list is not sorted by IP):

Cluster Information:

Name: OurCassandraCluster

Snitch: org.apache.cassandra.locator.SimpleSnitch

DynamicEndPointSnitch: enabled

Partitioner: org.apache.cassandra.dht.Murmur3Partitioner

Schema versions:

819a3ce1-a42c-3ba9-bd39-7c015749f11a: [10.111.22.103, 10.111.22.102, 10.111.22.105, 10.111.22.104]

134b246c-8d42-31a7-afd1-71e8a2d3d8a3: [10.111.22.101, 10.111.22.106]

If Node Schema Did Not Change

If this did not work, it means the other schema is the one Cassandra has decided is the authority, so repeat these steps for the list of nodes in the first schema list.

Fixed Cluster Schema

Once you have completed the above steps on each node, all nodes should now be on a single schema:

Cluster Information:

Name: OurCassandraCluster

Snitch: org.apache.cassandra.locator.SimpleSnitch

DynamicEndPointSnitch: enabled

Partitioner: org.apache.cassandra.dht.Murmur3Partitioner

Schema versions:

819a3ce1-a42c-3ba9-bd39-7c015749f11a: [10.111.22.103, 10.111.22.102, 10.111.22.101, 10.111.22.106, 10.111.22.105, 10.111.22.104]

I hope that helps!